My first reaction to this was: "And that's why I just got my $2,725 check of fileverse tokens now that fileverse has grown to the point where my dad regularly writes docs in fileverse that he sends to me"

My second reaction to this was: "I see how this makes total sense from a crypto perspective, but it makes zero sense from an outside-of-crypto perspective ... hmm, what does this say about crypto?"

My more detailed reaction:

There are many distinct activities that you can refer to as "incentivizing users". First of all, paying some of your users with coins that your app gets by charging other users is totally fine: that's just a sustainable economic loop, there is nothing wrong with this. The activity that I think people are thinking about more is paying all your users while the app is early, with the hope of "building network effect" and then making that money back (and much more) later when the app is mature.

My general view, if you _really_ have to simplify it and sacrifice some nuances for the sake of brevity, is:

* Incentives that compensate for unavoidable temporary costs that come from your thing being immature are good
* Incentives that bring in totally new classes of users that would not use even a mature version of your thing without those incentives are bad

For example, I have no problem with many types of defi liquidity rewards, because to me they compensate for per-year risk of the project being hacked or the team turning out to be scammers, a risk that is inherently higher for new projects and much lower once a project becomes more mature.

Paying people to make tweets that get attention might be the most "pure" example of the wrong thing to do, because you are going to get people who come to your platform to make tweets, with every incentive to game any mechanisms you have to judge quality and optimize for maximum laziness on their part, and then immediately disappear as soon as the incentives go away.
In principle, content incentivization is a valuable and important problem, but it should be done with care, with an eye to quality over quantity, which are not natural goals that designers of "bootstrapping incentives" have by default.

In fact, even if users do not disappear after incentives go away, there is a further problem: you succeed from the perspective of growing *quantity of community*, but you fail from the perspective of growing *quality of community*. In the case of defi protocols, you can argue: 1 ETH in an LP pool is 1 ETH doing useful work, regardless of whether it's put there by a cypherpunk or an amoral money maximizer. But, (i) this argument can only be made for defi, not for other areas like social, where esp. in the 2020s, quality matters more than quantity, and (ii) there are always subtle ways in which higher-quality community members help your protocol more in the long term (eg. by writing open-source tools, answering people's questions in online or offline forums, being potential developers on your team).

The ideal incentive is an incentive that exactly compensates for temporary downsides of your protocol, those downsides that will disappear once the protocol has more maturity, and attracts zero users who would not be there organically once the protocol is mature.

Charging users fees, but paying them back in protocol tokens, I think is also reasonable: it's effectively turning your users into your investors by default, which seems like a good thing to do.

A further, more cynical take I have is that in the 2021-24 era, the "real product" was creating a speculative bubble, and so the real function of many incentives was to pump up narratives to justify the narrative for the bubble.
So any argument that incentives are good for bootstrapping acquisition should not be judged on the question of whether it's plausible, but on the question of whether it's more plausible than the alternative claim that it's all galaxy brain justification ( https://vitalik.eth.limo/general/2025/11/07/galaxybrain.html ) for a "pump and dump wearing a suit".

TLDR: the bulk of the effort should be on making an actually-useful app. This was historically ignored, because it's not necessary for narrative engineering to create a speculative bubble. But now it is necessary. And we do see that the successful apps now, the apps that we actually most appreciate and respect, do the bulk of their user acquisition work in that way, not by paying users to come in indiscriminately.

https://firefly.social/post/x/2021632354649821275

This is the right way to "make Ethereum be the home for AI". Don't just do what everyone else would do anyway, just on rails with an octahedron logo instead of a square or circle or pentagon logo. Make something fundamentally better, using meaningful technological improvements in ZK privacy-preserving payments and reputation. https://firefly.social/post/x/2021579464589688847

Good speech by Cory Doctorow.

Let's repeal anti-circumvention laws. Nothing should be updateable without the user's permission, nothing critical should be a black box.

https://www.youtube.com/watch?v=3C1Gnxhfok0

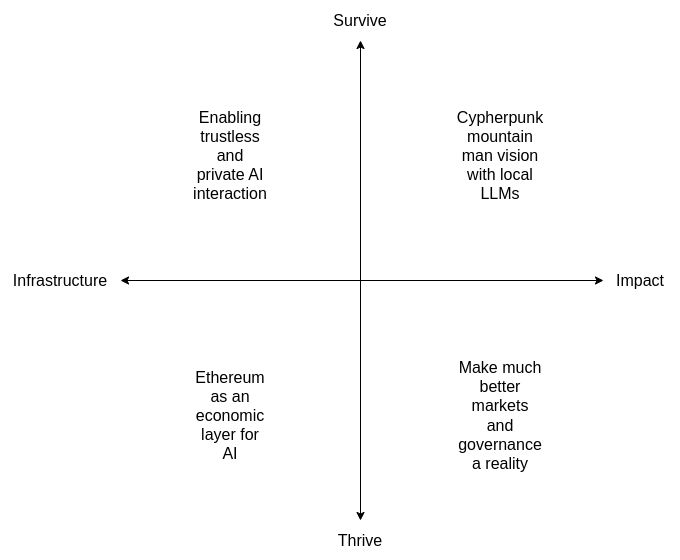

Two years ago, I wrote this post on the possible areas that I see for ethereum + AI intersections: https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

This is a topic that many people are excited about, but where I always worry that we think about the two from completely separate philosophical perspectives.

I am reminded of Toly's recent tweet that I should "work on AGI". I appreciate the compliment, for him to think that I am capable of contributing to such a lofty thing. However, I get this feeling that the frame of "work on AGI" itself contains an error: it is fundamentally undifferentiated, and has the connotation of "do the thing that, if you don't do it, someone else will do anyway two months later; the main difference is that you get to be the one at the top" (though this may not have been Toly's intention). It would be like describing Ethereum as "working in finance" or "working on computing".

To me, Ethereum, and my own view of how our civilization should do AGI, are precisely about choosing a positive direction rather than embracing undifferentiated acceleration of the arrow, and also I think it's actually important to integrate the crypto and AI perspectives.

I want an AI future where:

* We foster human freedom and empowerment (ie. we avoid both humans being relegated to retirement by AIs, and permanently stripped of power by human power structures that become impossible to surpass or escape)
* The world does not blow up (both "classic" superintelligent AI doom, and more chaotic scenarios from various forms of offense outpacing defense, cf. the four defense quadrants from the d/acc posts)

In the long term, this may involve crazy things like humans uploading or merging with AI, for those who want to be able to keep up with highly intelligent entities that can think a million times faster on silicon substrate.
In the shorter term, it involves much more "ordinary" ideas, but still ideas that require deep rethinking compared to previous computing paradigms.

So now, my updated view, which definitely focuses on that shorter term, and where Ethereum plays an important role but is only one piece of a bigger puzzle:

# Building tooling to make more trustless and/or private interaction with AIs possible.

This includes:

* Local LLM tooling
* ZK-payment for API calls (so you can call remote models without linking your identity from call to call)
* Ongoing work into cryptographic ways to improve AI privacy
* Client-side verification of cryptographic proofs, TEE attestations, and any other forms of server-side assurance

Basically, the kinds of things we might also build for non-LLM compute (see eg. my ethereum privacy roadmap from a year ago https://ethereum-magicians.org/t/a-maximally-simple-l1-privacy-roadmap/23459 ), but for LLM calls as the compute we are protecting.

# Ethereum as an economic layer for AI-related interactions

This includes:

* API calls
* Bots hiring bots
* Security deposits, potentially eventually more complicated contraptions like onchain dispute resolution
* ERC-8004, AI reputation ideas

The goal here is to enable AIs to interact economically, which makes viable more decentralized AI architectures (as opposed to non-economic coordination between AIs that are all designed and run by one organization "in-house"). Economies not for the sake of economies, but to enable more decentralized authority.

# Make the cypherpunk "mountain man" vision a reality

Basically, take the vision that cypherpunk radicals have always dreamed of (don't trust; verify everything), that has been nonviable in reality because humans are never actually going to verify all the code ourselves. Now, we can finally make that vision happen, with LLMs doing the hard parts.
This includes:

* Interacting with ethereum apps without needing third party UIs
* Having a local model propose transactions for you on its own
* Having a local model verify transactions created by dapp UIs
* Local smart contract auditing, and assistance interpreting the meaning of FV proofs provided by others
* Verifying trust models of applications and protocols

# Make much better markets and governance a reality

Prediction and decision markets, decentralized governance, quadratic voting, combinatorial auctions, universal barter economy, and all kinds of constructions are all beautiful in theory, but have been greatly hampered in reality by one big constraint: limits to human attention and decision-making power.

LLMs remove that limitation, and massively scale human judgement. Hence, we can revisit all of those ideas.

These are all things that Ethereum can help to make a reality. They are also ideas that are in the d/acc spirit: enabling decentralized cooperation, and improving defense. We can revisit the best ideas from 2014, and add on top many more new and better ones, and with AI (and ZK) we have a whole new set of tools to make them come to life.

We can describe the above as a 2x2 chart.

There's a lot to build!
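
To make one of the mechanisms named above concrete: under quadratic voting, casting n votes on a single issue costs n² voice credits, so each extra vote on the same issue gets more expensive. Below is a minimal Python sketch of that pricing rule only. The function names, the ballot layout and the 100-credit budget are hypothetical, chosen for illustration, and it ignores everything a real deployment would need (identity, collusion resistance, and so on).

```python
import math


def vote_cost(votes: int) -> int:
    """Credits needed to cast `votes` votes on one issue (quadratic pricing)."""
    return votes * votes


def max_votes(credits: int) -> int:
    """Largest number of votes on one issue that `credits` can pay for."""
    return math.isqrt(credits)


def total_cost(ballot: dict[str, int]) -> int:
    """Total credits for a ballot; negative numbers are votes against."""
    return sum(vote_cost(abs(v)) for v in ballot.values())


# Hypothetical voter with a budget of 100 voice credits.
budget = 100
ballot = {"proposal_a": 6, "proposal_b": -5, "proposal_c": 3}
cost = total_cost(ballot)
print(f"ballot costs {cost} credits, within budget: {cost <= budget}")
```

The quadratic price is what lets a voter express how strongly they care, not just which side they are on: spreading credits across issues buys more total votes than concentrating them on one.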

One reason (not the only reason) why I see people sometimes hesitating to switch more fully to Signal is that they got into a habit of using Signal as their "high-priority, clutter-free" inbox and Telegram as their "everyone else talk to me" inbox, and thus hesitate to make Signal "cluttered" again.

Things that I find work for me in this situation are:

* Signal now has a folders feature: you can right-click (if on phone, tap-and-hold) on a contact and an "Add to folder" option appears
* Using *another* app (eg. Session, Simplex) as your high-signal inbox; this way you also support projects that are trying to achieve even stronger privacy properties

Also, start to build a habit: when someone contacts you on Telegram, ask: "are you on Signal? I am <username> there", and move that conversation over to Signal.

I am capitulating, I will call Twitter "X" from now on.

"Tweet" becomes a generic term for messages on any platform that prioritizes short-form text content with X-like UX, similar linguistically to "kleenex".

Usage guide:

* "Vitalik is only tweeting on Farcaster and Lens this year, isn't it awful how much of an ivory tower elitist he is?"
* "Did you see Donald Trump's shocking new racist tweet on Truth Social?"
* "Back when I sent that in 2019, it was a tweet, and by this new definition it still is, but now we can also refer to it ex-post as an X post"

"Crypto Twitter" can remain as a set phrase, similarly to how Peking Duck is still called Peking Duck, though of course it would be funny if more people called it CryptoX (aka CrypTox).

(This tweet was sent simultaneously on X, Farcaster and Lens via Firefly)

I love how people refer to quantum-resistant cryptography as "pq" when actually it's all about moving away from having to juggle F_p and F_q.

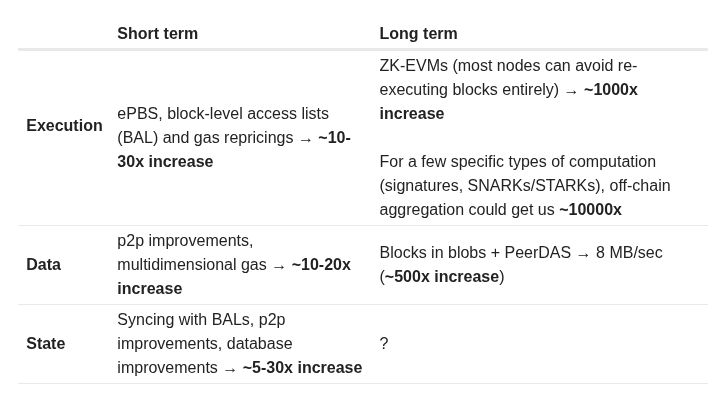

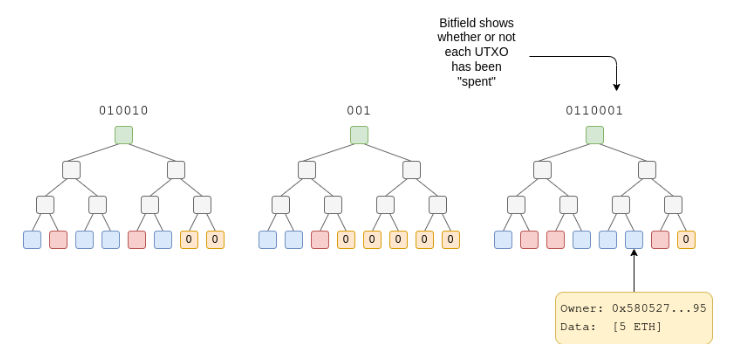

Hyper-scaling Ethereum state by creating new forms of state: https://ethresear.ch/t/hyper-scaling-state-by-creating-new-forms-of-state/24052

Summary:

* We want 1000x scale on Ethereum L1. We roughly know how to do this for execution and data. But scaling state is fundamentally harder.
* The most practical path for Ethereum may actually be to scale existing state only a medium amount, and at the same time introduce newer forms of state that would be extremely cheap but also more restrictive in how you can use them.
* In such a design, the present-day state tree would over time become dominated by user accounts, defi hub contracts, code, and other high-value objects, while all kinds of individual per-user state objects (eg. ERC20 balances, NFTs, CDPs) would be handled with cheaper but more restrictive tools. Making the developer abstractions to make this easy to implement for the use cases that make up >90% of state today seems very doable.

The word "grapefruit" is objectively ridiculous. It's like if there was an animal that we agreed to call a "dogmammal", that had nothing to do with dogs.

Have been following reactions to what I said about L2s about 1.5 days ago.

One important thing that I believe is: "make yet another EVM chain and add an optimistic bridge to Ethereum with a 1 week delay" is to infra what forking Compound is to governance - something we've done far too much for far too long, because we got comfortable, and which has sapped our imagination and put us in a dead end.

If you make an EVM chain *without* an optimistic bridge to Ethereum (aka an alt L1), that's even worse.

We don't friggin need more copypasta EVM chains, and we definitely don't need even more L1s. L1 is scaling and is going to bring lots of EVM blockspace - not infinite (AIs in particular will need both more blockspace and lower latency than even a greatly scaled L1 can offer), but lots. Build something that brings something new to the table. I gave a few examples: privacy, app-specific efficiency, ultra-low latency, but my list is surely very incomplete.

A second important thing that I believe is: regarding "connection to Ethereum", vibes need to match substance.

I personally am a fan of many of the things that can be called "app chains". For example I think there's a large chance that the optimal architecture for prediction markets is something like: the market gets issued and resolved on L1, user accounts are on L1, but trading happens on some based rollup or other L2-like system, where the execution reads the L1 to verify signatures and markets. I like architectures where deep connection to L1 is first-class, and not an afterthought ("we're pretty much a separate chain, but oh yeah, we have a bridge, and ok fine let's put 1-2 devs to get it to stage 1 so the l2beat people will put a green checkmark on it so vitalik likes us").

The other extreme of "app chain", eg. the version where you convince some government registry, or social media platform, or gaming thing, to start putting merkle roots of its database, with STARKs that prove every update was authorized and signed and executed according to a pre-committed algorithm, onchain, is also reasonable - this is what makes the most sense to me in terms of "institutional L2s". It's obviously not Ethereum, not credibly neutral and not trustless - the operator can always just choose to say "we're switching to a different version with different rules now". But it would enable verifiable algorithmic transparency, a property that many of us would love to see in government, social media algorithms or wherever else, and it may enable economic activity that would otherwise not be possible.

I think if you're the first thing, it's valid and great to call yourself an Ethereum application - it can't survive without Ethereum even technologically, it maximizes interoperability and composability with other Ethereum applications.

If you're the second thing, then you're not Ethereum, but you are (i) bringing humanity more algorithmic transparency and trust minimization, so you're pursuing a similar vision, and (ii) depending on details probably synergistic with Ethereum. So you should just say those things directly!

Basically:

1. Do something that brings something actually new to the table.
2. Vibes should match substance - the degree of connection to Ethereum in your public image should reflect the degree of connection to Ethereum that your thing has in reality.
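
As a rough illustration of the "merkle roots of its database" idea above, here is a minimal Python sketch of how an operator might compute the single 32-byte commitment it would publish. Everything in it is an assumption made for illustration: the `merkle_root` helper, SHA-256 as the hash, the leaf/inner prefixes and the rule of repeating the last node on odd levels are hypothetical choices, not taken from any particular system. The STARK proving that each update followed the pre-committed algorithm is out of scope here.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(records: list[bytes]) -> bytes:
    """Return a Merkle root over `records` (hypothetical tree layout)."""
    if not records:
        return _h(b"")
    # Leaves and inner nodes use different prefixes so that an inner node
    # can never be passed off as a leaf, or the other way round.
    level = [_h(b"\x00" + r) for r in records]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # pad odd levels by repeating the last node
        level = [
            _h(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0]


# Hypothetical database rows; changing any one row changes the root.
db = [b"alice:10", b"bob:25", b"carol:7"]
root_before = merkle_root(db)
db[1] = b"bob:26"
root_after = merkle_root(db)
print(root_before.hex())
print(root_after.hex())
```

The point of publishing only the root is that it is small enough to put onchain, while still binding the operator to one exact version of the database.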